When building machine learning systems, it’s common to take a model pre-trained on a large dataset and fine-tune it on a smaller target dataset. This allows the model to adapt its learned features to the new data.

However, naively fine-tuning all the model’s parameters can cause overfitting, since the target data is limited.

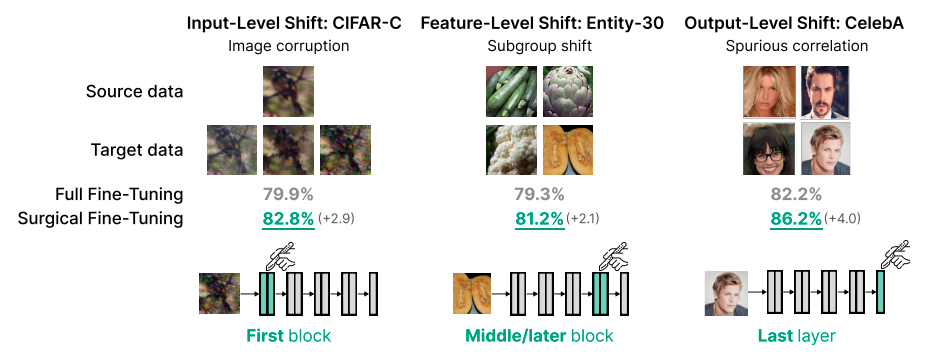

In a new paper, researchers from Stanford explore an intriguing technique they call “surgical fine-tuning” to address this challenge. The key insight is that fine-tuning just a small, contiguous subset of a model’s layers is often sufficient for adapting to a new dataset.

In fact, they show across 7 real-world datasets that surgical fine-tuning can match or even exceed the performance of fine-tuning all layers.
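The core idea, training one contiguous block while freezing everything else, can be sketched in a few lines. This is a minimal illustration rather than the authors’ code: the helper name `freeze_all_but` is hypothetical, and it assumes a model object that exposes `named_parameters()` yielding `(name, parameter)` pairs with a boolean `requires_grad` attribute, as PyTorch’s `nn.Module` does.

```python
def freeze_all_but(model, trainable_prefixes):
    """Leave only parameters whose names start with a given prefix trainable.

    Assumption: `model.named_parameters()` yields (name, parameter) pairs and
    each parameter has a boolean `requires_grad` attribute (PyTorch-style).
    Returns the names of the parameters that remain trainable.
    """
    prefixes = tuple(trainable_prefixes)
    trainable = []
    for name, param in model.named_parameters():
        # Freeze everything except the chosen block(s).
        param.requires_grad = name.startswith(prefixes)
        if param.requires_grad:
            trainable.append(name)
    return trainable
```

With a torchvision-style ResNet, for example, `freeze_all_but(model, ["layer1."])` would leave only the first residual block trainable. The trailing dot in the prefix keeps `layer1.` from also matching a name such as `layer10.`. The optimizer should then be given only the parameters that still have `requires_grad` set.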

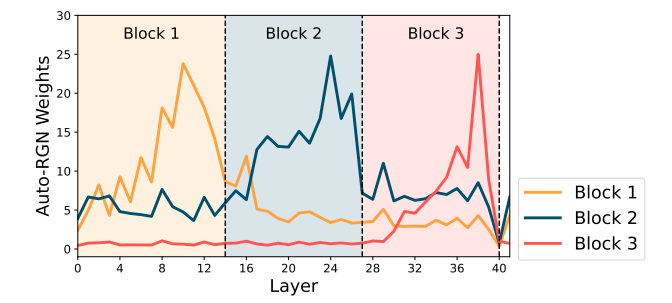

They also find that the best set of layers to fine-tune depends systematically on the type of distribution shift between the source and target datasets.

For example, on image corruptions, which can be seen as an input-level shift, fine-tuning only the first layer performs best.

On the other hand, for shifts between different subpopulations of the same classes, tuning the middle layers is most effective. And for label flips, tuning just the last layer works best.
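The pattern described above amounts to a simple rule of thumb, which can be written down as a lookup table. The shift labels (`"input"`, `"feature"`, `"output"`) and the function name `suggest_block` are illustrative choices made here, not identifiers from the paper, and the mapping is a starting point rather than a guarantee.

```python
# Rule of thumb from the results summarized above.
# Keys and block labels are illustrative names chosen for this sketch.
SHIFT_TO_BLOCK = {
    "input": "first",     # e.g. image corruptions
    "feature": "middle",  # e.g. new subpopulations of the same classes
    "output": "last",     # e.g. flipped labels
}


def suggest_block(shift_type):
    """Return which part of the network to fine-tune for a given shift type."""
    try:
        return SHIFT_TO_BLOCK[shift_type]
    except KeyError:
        known = ", ".join(sorted(SHIFT_TO_BLOCK))
        raise ValueError(
            f"unknown shift type {shift_type!r}; expected one of: {known}"
        ) from None
```

In practice the type of shift is not always known in advance, so a suggestion like this is best checked against held-out target data.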

The researchers explain this phenomenon by arguing that each type of distribution shift requires modifying only certain portions of a model.

By updating only those portions, surgical fine-tuning avoids unnecessary changes to the rest of the network, which reduces the risk of overfitting.

This insight is supported by theoretical analysis of simplified 2-layer networks.

Overall, surgically fine-tuning a subset of layers is a simple but effective technique to adapt pre-trained models.

The surprising finding that the optimal layers depend on the shift type sheds new light on how neural networks learn and adapt.

In the future, automatically determining the right layers to tune could make transfer learning even more powerful.
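One way such automatic selection might look is to score each block by how large its gradient on the target data is relative to its weights, and then prefer the highest-scoring blocks. The sketch below is an assumption made for illustration, not a method described in this summary: the function names are hypothetical, and parameters and gradients are represented as plain lists of floats to keep the example self-contained.

```python
import math


def l2_norm(values):
    """Euclidean norm of a flat list of floats."""
    return math.sqrt(sum(v * v for v in values))


def rank_blocks_by_relative_gradient(params, grads, eps=1e-12):
    """Rank blocks by ||gradient|| / ||parameters||, largest first.

    `params` and `grads` map a block name to a flat list of floats.
    A high score suggests the block wants to change a lot relative to
    its current weights; this is a heuristic, not a guarantee.
    """
    scores = {
        name: l2_norm(grads[name]) / (l2_norm(params[name]) + eps)
        for name in params
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

The gradients would come from a batch of target data before any fine-tuning step. The ranking could then be used to pick a block to unfreeze, or compared against the results of trying each block on a validation set.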