Introduction

Recent advances in natural language processing have led to large transformer-based language models that exhibit impressive few-shot learning abilities. Specifically, when trained at sufficient scale, these models can learn new language tasks after being prompted with just a few examples, without any further gradient updates.

Despite these capabilities, large language models can only process text. They cannot take inputs in other modalities such as vision, so there is no direct way to communicate visual information, tasks, or concepts to them: we cannot simply show the model an image along with a question and expect it to understand. The models are effectively ‘blind’ to anything outside of text.

In the paper “Multimodal Few-Shot Learning with Frozen Language Models”, Tsimpoukelli et al. propose an approach called “Frozen” for transferring these few-shot learning capabilities to multimodal tasks involving both language and vision.

Frozen provides a proof-of-concept for open-ended multimodal few-shot learning. While performance is not yet state-of-the-art, it serves as an important baseline for future research on adapting powerful language models to multimodal settings while preserving their sample efficiency.

How It Works

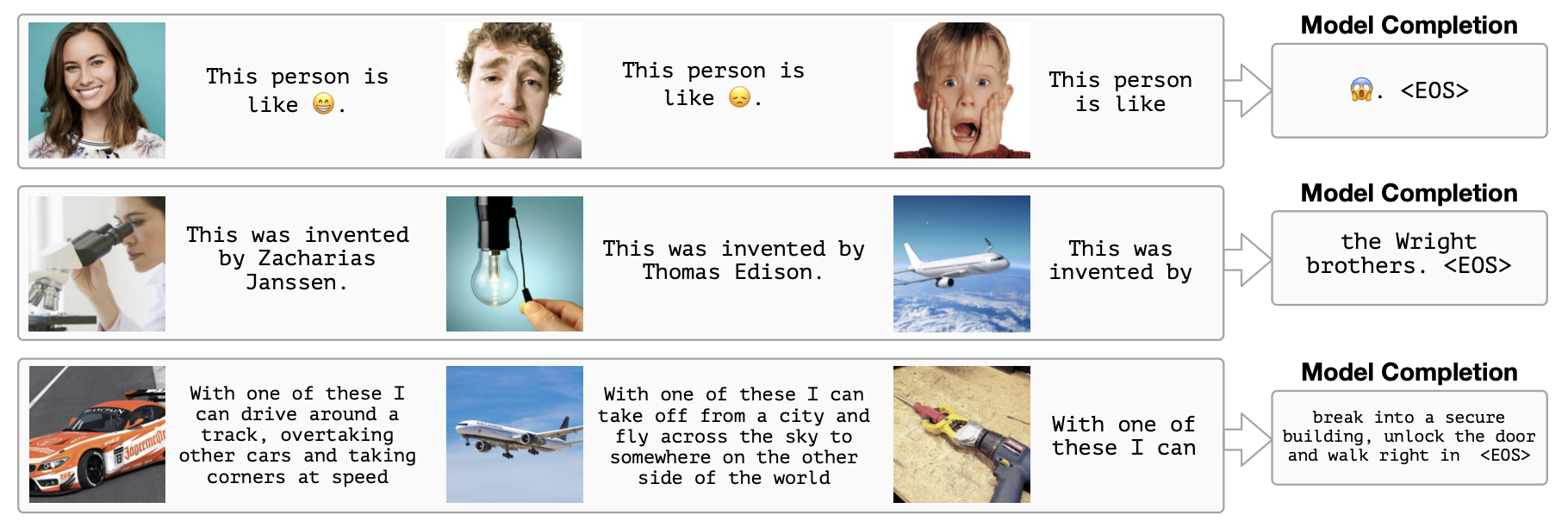

Frozen pairs a pre-trained language model, whose weights stay fixed, with a trainable vision encoder. The vision encoder learns to map images into the language model's word embedding space, so that the language model can generate appropriate captions from the encoded image.

During training, the weights of the large pre-trained language model remain frozen; gradients are still backpropagated through it to train the vision encoder from scratch. This modular design lets Frozen leverage an existing powerful language model without altering its weights.
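To make "backpropagating through a frozen model" concrete, here is a deliberately tiny scalar sketch. It is an illustration only, not the paper's setup: a fixed weight `theta` plays the frozen language model, a trainable weight `phi` plays the vision encoder, and a squared error stands in for the captioning loss. The names `train_toy`, `theta`, and `phi` are hypothetical.

```python
def train_toy(theta: float, phi: float, x: float, y: float, lr: float = 0.1, steps: int = 50):
    """Toy model: prediction = theta * (phi * x).

    theta plays the frozen language model, phi the trainable vision encoder.
    The loss (prediction - y) ** 2 is a stand-in for the captioning loss.
    """
    for _ in range(steps):
        z = phi * x                # "vision encoder" output
        pred = theta * z           # frozen "language model" applied to z
        # Chain rule: dL/dphi = dL/dpred * dpred/dz * dz/dphi, which passes through theta
        grad_phi = 2.0 * (pred - y) * theta * x
        phi -= lr * grad_phi       # only phi is updated; theta is read but never written
    return theta, phi


theta, phi = train_toy(theta=2.0, phi=0.0, x=1.0, y=4.0)
# theta is still 2.0, and phi converges towards 2.0 so that theta * phi * x matches y
```

The frozen weight still shapes the gradient (it appears in `grad_phi`), which is exactly why the encoder learns to produce inputs the fixed model can use.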

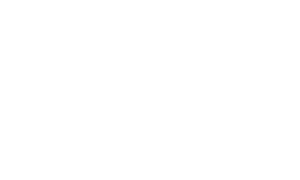

The resulting Frozen model retains the few-shot learning abilities of large language models but can now process interleaved sequences of text and images. This makes it possible to prompt the model with examples of a new multimodal task before evaluating it (e.g., “teaching” the model the name of a new visual category and then immediately asking about that category).
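An interleaved prompt is ultimately just one flat sequence of embeddings: each image contributes its visual-prefix embeddings and each text span contributes its token embeddings, in order. The sketch below assumes hypothetical `encode_image` and `embed_text` callables and an `Image` placeholder class; the toy encoders (two prefix embeddings per image, whitespace "tokenization") are for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Union

Embedding = List[float]


@dataclass(frozen=True)
class Image:
    """Hypothetical placeholder for a raw image."""
    name: str


Segment = Union[Image, str]


def build_prompt_embeddings(
    segments: Sequence[Segment],
    encode_image: Callable[[Image], List[Embedding]],
    embed_text: Callable[[str], List[Embedding]],
) -> List[Embedding]:
    """Flatten an interleaved image/text prompt into one embedding sequence, in order."""
    sequence: List[Embedding] = []
    for segment in segments:
        if isinstance(segment, Image):
            sequence.extend(encode_image(segment))  # n visual-prefix embeddings
        else:
            sequence.extend(embed_text(segment))    # one embedding per text token
    return sequence


D = 4  # toy embedding size


def toy_encode_image(image: Image) -> List[Embedding]:
    return [[0.0] * D for _ in range(2)]  # two prefix embeddings per image


def toy_embed_text(text: str) -> List[Embedding]:
    return [[1.0] * D for _ in text.split()]  # whitespace "tokens"


prompt = [
    Image("new_object_1"),
    "This is a dax.",
    Image("new_object_2"),
    "Question: What is this? Answer:",
]
embeddings = build_prompt_embeddings(prompt, toy_encode_image, toy_embed_text)
```

Here the result has 2 + 4 + 2 + 5 = 13 embeddings; the language model would then be asked to continue the text after "Answer:".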

The authors demonstrate that Frozen can rapidly learn words for new objects and categories, answer visual questions with only a handful of examples, and make use of outside knowledge. It achieves strong generalization in both zero-shot and few-shot settings on a range of benchmarks.

Frozen Architecture

- Pre-trained language model: A large transformer-based language model pre-trained on text data. Frozen uses a 7-billion-parameter model trained on the C4 dataset.

- Vision encoder: A neural network that encodes images into the word embedding space of the language model. Frozen uses an NF-ResNet-50 vision encoder.

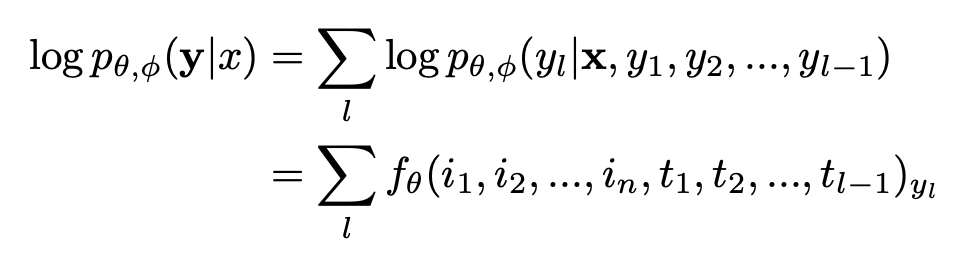

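visual_prefix = vision_encoder(x)  # => [i1, i2, ..., in]: n embeddings, each of the LM's embedding size D
concatenated_embeddings = [i1, i2, ..., in, t1, t2, ..., tL]  # visual prefix followed by the L caption token embeddings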

The concatenated embeddings are passed into the frozen language model. Training adjusts only the vision encoder, so that the language model assigns high likelihood to the correct caption for the image.
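A minimal sketch of how the visual prefix could be formed and concatenated, assuming the encoder's pooled feature vector is passed through a linear layer with n × D outputs and reshaped into n embeddings. The linear head, the function names, and the toy sizes are illustrative assumptions; `concatenate` mirrors the name used in the training pseudocode.

```python
from typing import List

Matrix = List[List[float]]


def project_to_prefix(features: List[float], weight: Matrix, bias: List[float], n: int, d: int) -> Matrix:
    """Linearly map a pooled image feature vector to n embeddings of size d.

    weight: n * d rows, each of length len(features); bias: length n * d (hypothetical head).
    """
    if len(weight) != n * d or len(bias) != n * d:
        raise ValueError("weight and bias must have n * d rows")
    flat = [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weight, bias)]
    return [flat[i * d:(i + 1) * d] for i in range(n)]  # reshape [n * d] -> [n, d]


def concatenate(visual_prefix: Matrix, caption_embeddings: Matrix) -> Matrix:
    """Visual prefix first, then caption token embeddings: [n + L, d]."""
    return visual_prefix + caption_embeddings


features = [1.0, 2.0]  # toy pooled encoder output
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0],
          [2.0, 0.0], [0.0, 2.0], [1.0, -1.0]]
prefix = project_to_prefix(features, weight, [0.0] * 6, n=2, d=3)
# prefix == [[1.0, 2.0, 3.0], [2.0, 4.0, -1.0]]
sequence = concatenate(prefix, [[0.0, 0.0, 0.0]] * 4)  # n + L = 2 + 4 = 6 rows
```

Because the prefix lives in the same D-dimensional space as word embeddings, the language model consumes it exactly as it would consume ordinary tokens.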

Training Methodology

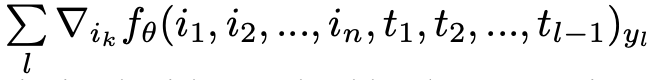

The authors train the Frozen model on the Conceptual Captions dataset, which contains around 3 million image-caption pairs. The training objective is caption generation: for an image x, the vision encoder parameters φ are trained to maximize the likelihood of its caption y under the frozen language model.
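Written out with the autoregressive factorization, the quantity being maximized is shown below. Notation is assumed for this summary: y = (y_1, …, y_L) are the caption tokens, t_l is the embedding of y_l, [i_1, …, i_n] = g_φ(x) is the visual prefix produced by the vision encoder, and f_θ(·)_{y_l} denotes the frozen language model's log-probability of token y_l at the next position. Only φ is updated; θ stays fixed.

```latex
\log p_{\theta,\phi}(y \mid x)
  = \sum_{l=1}^{L} \log p_{\theta,\phi}(y_l \mid x, y_1, \ldots, y_{l-1})
  = \sum_{l=1}^{L} f_\theta(i_1, \ldots, i_n, t_1, \ldots, t_{l-1})_{y_l},
\qquad [i_1, \ldots, i_n] = g_\phi(x)
```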

Because gradients can flow through the frozen language model, the vision encoder parameters φ can be optimized with standard backpropagation and gradient-based optimization.

In this way, the multimodal model leverages the capabilities of a large pre-trained language model while learning to incorporate visual information through the trainable vision encoder.

Some key aspects of the training process:

- Early stopping based on perplexity on a validation set, typically after a single epoch.

- Adam optimizer with learning rate 3e-4 and β1 = 0.9, β2 = 0.95.

- Batch size 128.

- Image resolution of 224×224, with padding/resizing for non-square images as needed.

- The language model uses relative positional encodings, which let it generalize to prompts in which images appear at different positions.

- The language model weights θ remain frozen, while training updates only the vision encoder weights φ.

- No task-specific fine-tuning is done – the model learns only from the Conceptual Captions captioning task.
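The optimizer settings above can be collected in a small config, alongside a single Adam update to show where β1 and β2 enter. This is a sketch of the standard Adam rule applied to one scalar parameter of φ, not the authors' training code; `FrozenTrainingConfig` and `adam_step` are hypothetical names, and `eps = 1e-8` is an assumption because the text does not give it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FrozenTrainingConfig:
    """Hyperparameters from the list above; eps is an assumption (not given in the text)."""
    learning_rate: float = 3e-4
    beta1: float = 0.9
    beta2: float = 0.95
    batch_size: int = 128
    image_size: int = 224
    eps: float = 1e-8


def adam_step(param: float, grad: float, m: float, v: float, t: int, cfg: FrozenTrainingConfig):
    """One Adam update for a single scalar parameter of the vision encoder (phi).

    m and v are the running first and second moment estimates; t is the 1-based step count.
    """
    m = cfg.beta1 * m + (1 - cfg.beta1) * grad
    v = cfg.beta2 * v + (1 - cfg.beta2) * grad * grad
    m_hat = m / (1 - cfg.beta1 ** t)  # bias correction for the zero-initialized moments
    v_hat = v / (1 - cfg.beta2 ** t)
    param -= cfg.learning_rate * m_hat / (math.sqrt(v_hat) + cfg.eps)
    return param, m, v


cfg = FrozenTrainingConfig()
p, m, v = adam_step(param=1.0, grad=2.0, m=0.0, v=0.0, t=1, cfg=cfg)
# On the first step the update size is roughly learning_rate, whatever the gradient's scale
```

A β2 of 0.95 (lower than the common 0.999) makes the second-moment estimate adapt faster to changes in gradient magnitude.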

The authors emphasize that keeping the language model frozen is key to multimodal generalization: fine-tuning the language model weights hurt performance on downstream VQA tasks compared with the frozen version. (A likely reason is that far less paired image-caption data is available than the massive text corpora used to pretrain the language model. Fine-tuning on this much smaller dataset can disrupt the general knowledge encoded in the language model and hurt transfer to new tasks, whereas keeping the model fixed preserves those capabilities.)

The simple captioning objective, combined with a fixed language model pretrained on diverse text, yields a model whose general knowledge transfers effectively to novel multimodal tasks.

import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader

# PretrainedLanguageModel, VisionEncoder and ConceptualCaptionsDataset are hypothetical
# stand-ins for the components described above, not a real library API.

# Initialize the pretrained language model and freeze its parameters θ
language_model = PretrainedLanguageModel()
language_model.requires_grad_(False)  # no gradients are stored for θ
language_model.eval()

# Initialize the vision encoder (NF-ResNet-50 backbone); its parameters φ are trained
vision_encoder = VisionEncoder()

# The optimizer sees only φ; hyperparameters follow the list above
optimizer = torch.optim.Adam(vision_encoder.parameters(), lr=3e-4, betas=(0.9, 0.95))

# Dataset of (image, caption token ids) pairs
dataset = ConceptualCaptionsDataset()
dataloader = DataLoader(dataset, batch_size=128, shuffle=True)

num_epochs = 1  # early stopping on validation perplexity typically ends training after about one epoch

for epoch in range(num_epochs):
    for images, captions in dataloader:  # images: [B, 3, 224, 224], captions: [B, L] token ids
        optimizer.zero_grad()

        # Visual prefix from the vision encoder: [B, n, D]
        visual_prefix = vision_encoder(images)

        # Caption token embeddings from the (frozen) embedding table: [B, L, D]
        caption_embeddings = language_model.get_token_embeddings(captions)

        # Prepend the visual prefix: [B, n + L, D]
        concatenated_embeddings = torch.cat([visual_prefix, caption_embeddings], dim=1)

        # Hypothetical forward pass on embeddings, returning next-token logits: [B, n + L, vocab_size]
        logits = language_model(concatenated_embeddings)

        # Position p predicts token p + 1, so positions n-1 .. n+L-2 score the L caption tokens;
        # the visual prefix itself is never a prediction target
        n = visual_prefix.shape[1]
        caption_logits = logits[:, n - 1 : -1, :]
        loss = F.cross_entropy(caption_logits.reshape(-1, caption_logits.shape[-1]), captions.reshape(-1))

        # Gradients flow back through the frozen language model into φ only
        loss.backward()
        optimizer.step()

    # Optional: evaluate validation perplexity, log metrics, save checkpoints, etc.
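The loss step in the training loop hides one detail: which output positions are scored. Below is a pure-Python sketch, assuming (hypothetically) that the language model returns one row of next-token logits per input position. The visual prefix only conditions the prediction, and caption token l is scored by the output one position earlier. `log_softmax` and `caption_nll` are illustrative names.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one row of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def caption_nll(logits: Sequence[Sequence[float]], caption_ids: Sequence[int], n_prefix: int) -> float:
    """Negative log-likelihood of the caption tokens given the visual prefix.

    logits: one row of next-token logits per position of the [n_prefix + L] input sequence.
    The row at position p predicts the token at position p + 1, so caption token idx
    (sequence position n_prefix + idx) is scored by row n_prefix + idx - 1.
    The last row and all prefix rows except the final one are unused.
    """
    if n_prefix < 1 or len(logits) != n_prefix + len(caption_ids):
        raise ValueError("need n_prefix >= 1 and one logit row per input position")
    nll = 0.0
    for idx, token in enumerate(caption_ids):
        nll -= log_softmax(logits[n_prefix + idx - 1])[token]
    return nll
```

With all-zero logits over a vocabulary of 4, every caption token has probability 1/4, so three caption tokens give an NLL of 3 · log 4.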

Evaluation Tasks and Results

The authors evaluate Frozen on a diverse set of multimodal tasks to quantify its few-shot learning capabilities:

Rapid Task Adaptation

- Visual Question Answering (VQA): Frozen achieves 29.5% accuracy zero-shot on VQAv2 by prompt engineering alone. Performance improves to 38.2% with just 4 example image-question-answer triples.

- Frozen outperforms a variant that also fine-tunes the language model; fine-tuning hurts generalization.
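The few-shot VQA setting amounts to placing k solved examples in front of the query. A sketch of that prompt layout, assuming a "Question: … Answer: …" text template and a hypothetical `Image` placeholder (the exact template wording is an assumption here); with no support examples it reduces to the zero-shot prompt.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple, Union


@dataclass(frozen=True)
class Image:
    """Hypothetical placeholder for an image."""
    name: str


Segment = Union[Image, str]


def vqa_prompt(
    support: Sequence[Tuple[Image, str, str]],
    query_image: Image,
    query_question: str,
) -> List[Segment]:
    """Interleave k solved (image, question, answer) examples, then the unanswered query."""
    segments: List[Segment] = []
    for image, question, answer in support:
        segments.extend([image, f"Question: {question} Answer: {answer}"])
    # The model is asked to continue the text after the final "Answer:"
    segments.extend([query_image, f"Question: {query_question} Answer:"])
    return segments


support = [(Image(f"train_{k}"), "What is the man holding?", "umbrella") for k in range(4)]
prompt = vqa_prompt(support, Image("test_0"), "What color is the bus?")
# 4 shots -> 4 * 2 + 2 = 10 segments; with support=[] this is the zero-shot prompt
```

No weights change between the zero-shot and 4-shot cases; only the prompt gets longer.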

Encyclopedic Knowledge

- Outside Knowledge VQA (OK-VQA): Tests visual questions whose answers require factual knowledge. Frozen achieves 12.6% accuracy, and larger language models perform better by leveraging more knowledge.

Fast Concept Binding

- Open-Ended miniImageNet: Frozen learns new names for objects from examples and generates them correctly, reaching up to 58.9% accuracy on 2-way classification.

- Fast VQA: Frozen answers questions about new visual concepts introduced through examples, reaching 7.9% on this challenging task.

The strong generalization and few-shot improvements across this diverse set of benchmarks highlight Frozen’s capability as an open-ended multimodal learner.

While not state-of-the-art on any individual task, Frozen provides a unified model capable of:

- Zero-shot transfer

- Rapid task adaptation

- Leveraging world knowledge

- Fast concept binding

This demonstrates the viability of adapting powerful language models to multimodal settings while retaining their few-shot learning superpowers.

Conclusion

In this work, Tsimpoukelli et al. present Frozen – an approach for giving large language models the ability to process multimodal inputs involving both text and images. Frozen trains an image encoder to map images into the semantic space of a fixed pre-trained language model.

The resulting model retains the powerful capabilities of language models like few-shot learning, while gaining the ability to handle arbitrary prompts with images and text. Experiments across a diverse set of multimodal benchmarks show that Frozen can rapidly adapt to new tasks, leverage knowledge from pre-training, and learn visual concepts from just a few examples.

While not yet performing at state-of-the-art levels, Frozen provides an important proof-of-concept and baseline for future research on open-ended multimodal few-shot learning.

Directions for improving Frozen include:

- More advanced vision encoder architectures

- Techniques to increase robustness and sample-efficiency

- Scaling up model size and data

- Improved integration of images into the model context

By building on large language models pretrained on diverse corpora, the Frozen approach could lead to more general-purpose multimodal systems that acquire broad world knowledge and reuse this knowledge for rapid learning. This is a step towards more human-like multimodal intelligence.